2. Random Variables

1 Random Variables

Informally, a random variable is a variable that takes numerical values and whose outcome is determined by an experiment.

Random Variable: Let $S$ be a sample space with a probability measure. A random variable (or stochastic variable) $X$ is a real-valued function $X: S \to \mathbb{R}$ defined over the elements of $S$.

Important convention: Random variables are always denoted by capital letters (e.g. $X$), whereas the particular values they assume are always denoted by lowercase letters (e.g. $x$).

Remark: Although a random variable is a function of $s \in S$, we usually drop the argument, that is, we write $X$ rather than $X(s)$.

Remark:

Once the random variable is defined, $\mathbb{R}$ is the space we work in;

The fact that the definition of a random variable is limited to real-valued functions does not impose any restrictions;

If the outcomes of an experiment are of the categorical type, we can make the descriptions real-valued by arbitrarily coding the categories, for example by representing them with distinct numbers.

Example 1.1 One flips a coin and observes if a head or tail is obtained.

Sample Space: $S = \{H, T\}$, where $H$ denotes head and $T$ denotes tail.

Random Variable:

The definition of a random variable does not rely explicitly on the concept of probability; random variables are introduced to make the computation of probabilities easier. Indeed, if $B \subseteq \mathbb{R}$, then

$$P(X \in B) = P(\{s \in S : X(s) \in B\}).$$

It is now clear that: In particular,
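The preimage identity $P(X \in B) = P(\{s \in S : X(s) \in B\})$ can be checked mechanically on a finite sample space. The sketch below assumes a fair coin and the coding $X(H) = 0$, $X(T) = 1$ (neither is fixed by Example 1.1); the names `P_S`, `X` and `prob_X_in` are hypothetical, and the set $B$ is passed as a predicate.

```python
from fractions import Fraction

# Assumed setup (illustrative only): one flip of a fair coin, S = {"H", "T"}.
P_S = {"H": Fraction(1, 2), "T": Fraction(1, 2)}


def X(s):
    """Random variable as a real-valued function on S (assumed coding: tail -> 1)."""
    return 1 if s == "T" else 0


def prob_X_in(in_B, rv=X, prob=P_S):
    """P(X in B) = P({s in S : X(s) in B}); B is given by its indicator predicate."""
    preimage = [s for s in prob if in_B(rv(s))]
    return sum((prob[s] for s in preimage), Fraction(0))


print(prob_X_in(lambda x: x == 1))  # P(X = 1) -> 1/2
print(prob_X_in(lambda x: x <= 1))  # P(X <= 1) -> 1
```

Probabilities are computed only on $S$; the random variable merely tells us which outcomes belong to the event.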

2 Cumulative Distribution Function

2.1 Cumulative distribution function

Let $X$ be a random variable. The cumulative distribution function (CDF) of $X$ is the real function of a real variable $F_X: \mathbb{R} \to [0, 1]$ given by:

$$F_X(x) = P(X \le x), \quad x \in \mathbb{R}.$$

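Properties of CDFs:

1. $F_X$ is non-decreasing: if $x_1 < x_2$, then $F_X(x_1) \le F_X(x_2)$;

2. $\lim_{x \to -\infty} F_X(x) = 0$ and

3. $\lim_{x \to +\infty} F_X(x) = 1$;

4. $P(a < X \le b) = F_X(b) - F_X(a)$ for $a < b$;

5. $\lim_{x \to a^+} F_X(x) = F_X(a)$, therefore $F_X$ is right continuous;

6. $P(X = a) = F_X(a) - F_X(a^-)$, where $F_X(a^-) = \lim_{x \to a^-} F_X(x)$, for any finite real number $a$.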

Example 2.1 One flips a coin and observes if a head or tail is obtained.

Sample Space: $S = \{H, T\}$

Random Variable: $X(H) = 0$ and $X(T) = 1$, that is, $X$ counts the number of tails obtained.

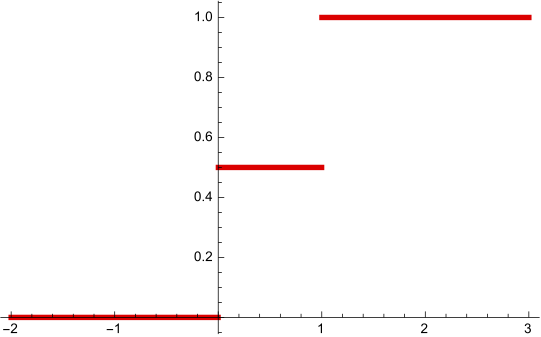

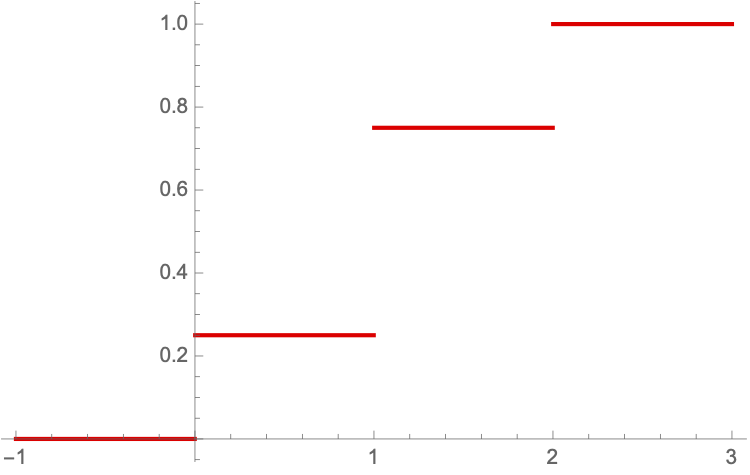

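It is easy to see that, for a fair coin, $P(X = 0) = P(\{H\}) = 1/2$ and $P(X = 1) = P(\{T\}) = 1/2$. Since $F_X(x) = P(X \le x)$, we have

$$F_X(x) = \begin{cases} 0, & x < 0,\\ 1/2, & 0 \le x < 1,\\ 1, & x \ge 1. \end{cases}$$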

Example 2.2 One flips a coin twice and counts the number of tails obtained.

Sample Space: $S = \{HH, HT, TH, TT\}$

Random Variable: $X(HH) = 0$, $X(HT) = X(TH) = 1$, $X(TT) = 2$.

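It is easy to see that, for a fair coin, $P(X = 1) = P(\{HT, TH\}) = 1/2$ and $P(X = x) = 1/4$ for $x = 0$ and $x = 2$. Since $F_X(x) = P(X \le x)$, we have

$$F_X(x) = \begin{cases} 0, & x < 0,\\ 1/4, & 0 \le x < 1,\\ 3/4, & 1 \le x < 2,\\ 1, & x \ge 2. \end{cases}$$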
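Examples 2.1 and 2.2 can be reproduced by enumerating the sample space of $n$ flips. This is a sketch under the assumption of a fair coin; `tails_pmf` and `cdf` are hypothetical helper names. It also checks property 6 numerically: the jump of $F_X$ at each support point equals $P(X = a)$.

```python
from fractions import Fraction
from itertools import product


def tails_pmf(n):
    """pmf of X = number of tails in n flips of a coin assumed fair."""
    outcomes = list(product("HT", repeat=n))  # sample space S, equally likely outcomes
    pmf = {}
    for s in outcomes:
        x = s.count("T")  # X(s) = number of tails in outcome s
        pmf[x] = pmf.get(x, Fraction(0)) + Fraction(1, len(outcomes))
    return dict(sorted(pmf.items()))


def cdf(pmf, x):
    """F_X(x) = P(X <= x), summing the pmf over support points not exceeding x."""
    return sum((p for v, p in pmf.items() if v <= x), Fraction(0))


pmf2 = tails_pmf(2)  # Example 2.2
print(pmf2)  # {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
for x in (-0.5, 0, 0.5, 1, 1.5, 2, 3):
    print(x, cdf(pmf2, x))

# Property 6: the jump F(a) - F(a^-) equals P(X = a). The support is the
# integers, so a - 1e-9 has the same CDF value as the left limit at a.
for a, p in pmf2.items():
    assert cdf(pmf2, a) - cdf(pmf2, a - 1e-9) == p
```

Calling `tails_pmf(1)` gives the setting of Example 2.1.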

Further properties:

Prove the previous properties!

Proof: To prove that , one notes that:

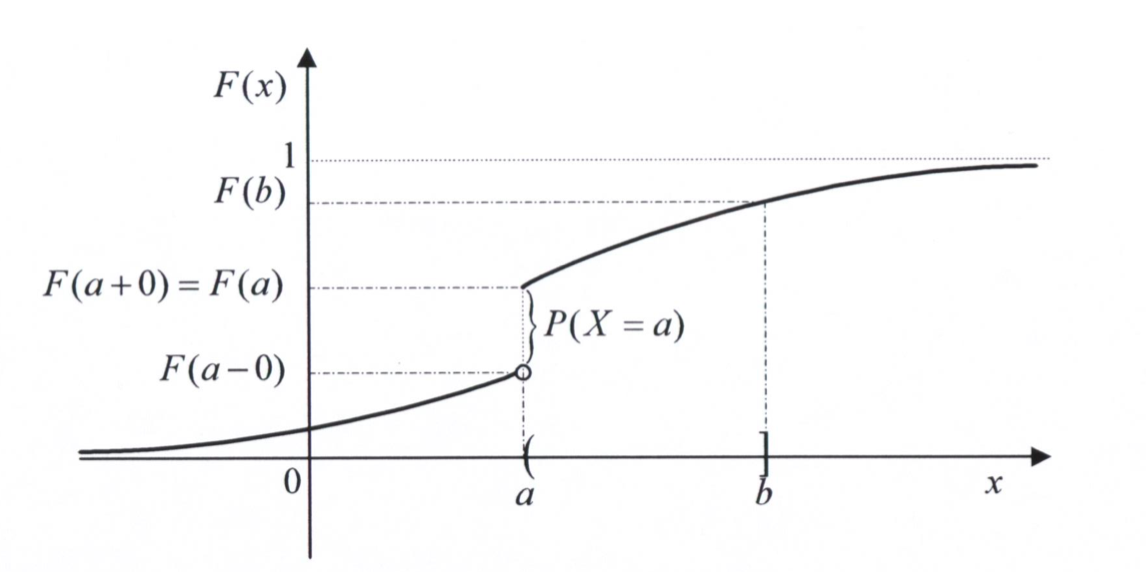

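The set of discontinuities of the cumulative distribution function is given by

$$D_X = \{x \in \mathbb{R} : F_X(x) - F_X(x^-) > 0\}.$$

Note that, by property 6, this is the same as

$$D_X = \{x \in \mathbb{R} : P(X = x) > 0\}.$$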

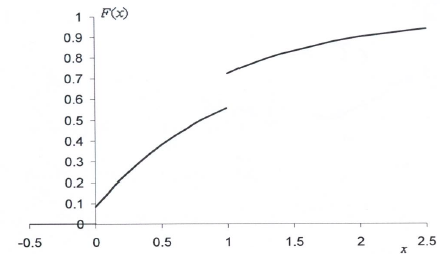

2.2 Types of random variables

Discrete Random Variable: $X$ is a discrete random variable if $P(X \in D_X) = 1$, where $D_X$ is the set of discontinuities of $F_X$.

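Continuous Random Variable: $X$ is a continuous random variable if $F_X$ is continuous (its set of discontinuities is empty) and there is a non-negative function $f_X$ such that

$$F_X(x) = \int_{-\infty}^{x} f_X(t)\,dt, \quad x \in \mathbb{R}.$$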

Mixed Random Variable: $X$ is a mixed random variable if

$$F_X(x) = \alpha F_{X_d}(x) + (1 - \alpha) F_{X_c}(x), \quad 0 < \alpha < 1,$$

where $X_d$ is a discrete random variable and $X_c$ is a continuous random variable.

3 Discrete Random Variables

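$X$ is a discrete random variable if

$$P(X \in D_X) = \sum_{x \in D_X} P(X = x) = 1,$$

where $D_X$ is the set of discontinuities of $F_X$.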

Additionally, the function $f_X$ defined by

$$f_X(x) = P(X = x), \quad x \in \mathbb{R},$$

is called the probability mass function (pmf).

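Theorem: A function $f$ can serve as the probability function of a discrete random variable if and only if its values, $f(x)$, satisfy the conditions

1. $f(x) \ge 0$ for each value within its domain;

2. $\sum_x f(x) = 1$, where the summation extends over all the values within its domain.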

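For discrete random variables, the cumulative distribution function (cdf) is given by:

$$F_X(x) = P(X \le x) = \sum_{t \le x} f_X(t), \quad x \in \mathbb{R}.$$

More generally, for any $B \subseteq \mathbb{R}$,

$$P(X \in B) = \sum_{x \in B} f_X(x).$$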

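Theorem: If the range of a random variable $X$ consists of the values $x_1 < x_2 < \dots < x_n$, then $f_X(x_1) = F_X(x_1)$ and

$$f_X(x_i) = F_X(x_i) - F_X(x_{i-1}), \quad i = 2, 3, \dots, n.$$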
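The two theorems above translate directly into code: check the pmf conditions, accumulate the cdf, and recover the pmf from cdf differences. This is a minimal sketch; the helper names and the pmf $f(x) = x/10$ for $x = 1, 2, 3, 4$ are hypothetical and are not the function of Example 3.1.

```python
from fractions import Fraction


def is_valid_pmf(f, support):
    """Theorem conditions: f(x) >= 0 on the support and the values sum to 1."""
    values = [f(x) for x in support]
    return all(v >= 0 for v in values) and sum(values) == 1


def cdf_values(f, support):
    """F(x_i) = sum of f(t) over t <= x_i, for the support points in increasing order."""
    total, out = Fraction(0), []
    for x in sorted(support):
        total += f(x)
        out.append(total)
    return out


def pmf_from_cdf(F_vals):
    """f(x_1) = F(x_1) and f(x_i) = F(x_i) - F(x_{i-1}) for i = 2, ..., n."""
    return [F_vals[0]] + [F_vals[i] - F_vals[i - 1] for i in range(1, len(F_vals))]


def f(x):
    """Hypothetical pmf for illustration only: f(x) = x/10 for x = 1, 2, 3, 4."""
    return Fraction(x, 10)


support = [1, 2, 3, 4]
print(is_valid_pmf(f, support))  # True
F_vals = cdf_values(f, support)  # 1/10, 3/10, 3/5, 1
print(pmf_from_cdf(F_vals) == [f(x) for x in support])  # True
```

Exact `Fraction` arithmetic avoids floating-point error in the test "sum equals 1".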

Example 3.1 Check whether the function given by , for can serve as the probability function of a discrete random variable $X$. Compute the cumulative distribution function of $X$.

4 Continuous Random Variables

4.1 Continuous Random Variables

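$X$ is a continuous random variable if $F_X$ is continuous (its set of discontinuities is empty) and there is a function $f_X \ge 0$ such that

$$F_X(x) = \int_{-\infty}^{x} f_X(t)\,dt, \quad x \in \mathbb{R}.$$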

Additionally, $f_X$ is called the probability density function (pdf) of $X$.

Remark:

Continuity of $F_X$ is necessary, but not sufficient, to guarantee that $X$ is a continuous random variable;

Note that $P(X = x) = 0$ for every $x \in \mathbb{R}$;

The function $f_X$ indicates how likely the random variable is to take values near each point.

4.2 Probability Density Function

Theorem. A function $f$ can serve as a probability density function of a continuous random variable if its values, $f(x)$, satisfy the conditions:

1. $f(x) \ge 0$ for $-\infty < x < \infty$;

2. $\int_{-\infty}^{\infty} f(x)\,dx = 1$.
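The two conditions can be checked numerically, and the second one is what fixes a normalizing constant. The sketch below uses a hypothetical unnormalized shape $g(x) = e^{-3x}$ for $x \ge 0$ (not necessarily the density of the next example) and a hand-written composite Simpson's rule, so it needs only the standard library; `scipy.integrate.quad` would be a common alternative if SciPy is available.

```python
import math


def simpson(g, a, b, n=10_000):
    """Composite Simpson's rule for the integral of g over [a, b]; n is made even."""
    if n % 2:
        n += 1
    h = (b - a) / n
    s = g(a) + g(b)
    s += 4 * sum(g(a + i * h) for i in range(1, n, 2))
    s += 2 * sum(g(a + i * h) for i in range(2, n, 2))
    return s * h / 3


def g(x):
    """Hypothetical unnormalized density: exp(-3x) for x >= 0, 0 elsewhere."""
    return math.exp(-3 * x) if x >= 0 else 0.0


# Condition 1: k*g(x) >= 0 on a grid (true for any k > 0 since g >= 0).
assert all(g(x / 10) >= 0 for x in range(-50, 201))
# Condition 2: choose k so that k times the integral equals 1. The upper limit
# +infinity is truncated at 20, where the remaining tail exp(-60)/3 is negligible.
k = 1 / simpson(g, 0.0, 20.0)
print(round(k, 6))  # close to the exact value 3
```

Analytically, $\int_0^\infty e^{-3x}\,dx = 1/3$, so $k = 3$, which the numerical value matches.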

Example 4.1 Let $X$ be a continuous random variable with a probability density function given by

Find the value of the parameter .

According to the previous theorem, we know that

From the second condition, we get that

.

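Theorem. If $f_X(x)$ and $F_X(x)$ are the values of the probability density and the distribution function of $X$ at $x$, then

$$P(a \le X \le b) = F_X(b) - F_X(a)$$

for any real constants $a$ and $b$ with $a \le b$, and

$$f_X(x) = \frac{dF_X(x)}{dx}$$

wherever the derivative exists.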
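Both statements of the theorem can be checked numerically for a concrete distribution. The sketch assumes, purely for illustration, an exponential distribution with rate 1, $F(x) = 1 - e^{-x}$ and $f(x) = e^{-x}$ for $x \ge 0$; the helpers `deriv` and `midpoint_integral` are hypothetical names.

```python
import math


def F(x):
    """Hypothetical CDF: 1 - exp(-x) for x >= 0 (expm1 avoids cancellation), 0 otherwise."""
    return -math.expm1(-x) if x >= 0 else 0.0


def f(x):
    """Matching density: exp(-x) for x >= 0, 0 otherwise."""
    return math.exp(-x) if x >= 0 else 0.0


def deriv(F, x, h=1e-6):
    """Central-difference approximation of F'(x)."""
    return (F(x + h) - F(x - h)) / (2 * h)


def midpoint_integral(f, a, b, n=100_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


a, b = 0.5, 2.0
print(F(b) - F(a), midpoint_integral(f, a, b))  # P(a <= X <= b) both ways
print(deriv(F, 1.0), f(1.0))                    # f = F' where F is differentiable
print(deriv(F, 0.0))  # about 0.5: one-sided slopes at 0 are 0 and 1, no derivative
```

At $x = 0$ the central difference averages the two one-sided slopes, which illustrates why a density value has to be assigned by convention at such points.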

Remarks:

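At the points where the CDF $F_X$ has no derivative, it is agreed that $f_X(x) = 0$. In fact, the value we give to $f_X$ at such points does not matter, as it does not affect the computation of $F_X(x) = \int_{-\infty}^{x} f_X(t)\,dt$.

The probability density function is not a probability and therefore it can take values greater than one; for example, the function equal to $2$ on $[0, 1/2]$ and $0$ elsewhere is a valid pdf.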

If is a continuous random variable

Example 4.2 Consider the continuous random variable $X$ with a probability density function $f_X$ and cumulative distribution function $F_X$ given by

Cumulative distribution function:

Is this function differentiable?

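Theorem: If $X$ is a continuous random variable and $a$ and $b$ are real constants with $a \le b$, then

$$P(a \le X \le b) = P(a \le X < b) = P(a < X \le b) = P(a < X < b).$$

Proof: To prove the previous theorem, one only needs to notice that the event $\{a < X < b\}$ is contained in each of the other three events, each of which is contained in $\{a \le X \le b\}$, and that

$$0 \le P(a \le X \le b) - P(a < X < b) \le P(X = a) + P(X = b) = 0.$$

Additionally, for $a = -\infty$ or $b = +\infty$ we have

$$P(X < b) = P(X \le b) = F_X(b), \qquad P(X > a) = P(X \ge a) = 1 - F_X(a).$$

Remark: The previous equalities are not necessarily true for discrete random variables.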
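A short counterexample shows why the remark holds. The sketch reuses the number of tails in two flips from Example 2.2, assuming a fair coin; the helper `prob` is a hypothetical name.

```python
from fractions import Fraction

# pmf of the number of tails in two flips of a coin assumed fair (Example 2.2).
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}


def prob(pred):
    """P(X satisfies pred) for the discrete X above."""
    return sum((p for x, p in pmf.items() if pred(x)), Fraction(0))


a, b = 1, 2
closed = prob(lambda x: a <= x <= b)    # P(1 <= X <= 2) = 3/4
open_ = prob(lambda x: a < x < b)       # P(1 < X < 2)   = 0
half_open = prob(lambda x: a < x <= b)  # P(1 < X <= 2)  = 1/4
print(closed, open_, half_open)         # 3/4 0 1/4
```

The endpoints carry positive probability here ($P(X = 1) = 1/2$), which is exactly what the continuous case rules out.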

5 Mixed random variables

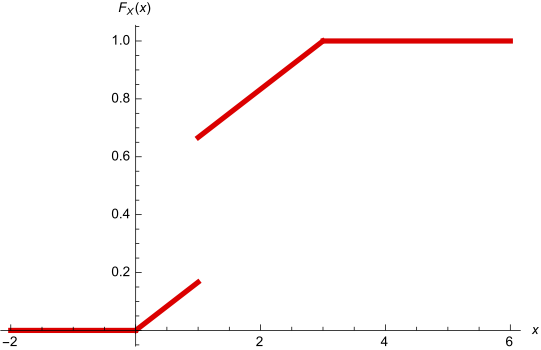

Mixed Random Variable: $X$ is a mixed random variable if

$$F_X(x) = \alpha F_{X_d}(x) + (1 - \alpha) F_{X_c}(x), \quad 0 < \alpha < 1,$$

where $X_d$ is a discrete r.v. and $X_c$ is a continuous r.v.

Example 5.1 A company has received 1 million € to invest in a new business. With probability , the firm does nothing, but with probability the money is invested. If it does not invest the money, the 1 million € is kept. Otherwise, the firm gets back a random amount uniformly distributed between and million €.

Let $X$ be the following random variable: What type of random variable is $X$?

$X$ is not a discrete r.v. because it takes values in a continuous set (an interval);

$X$ is not a continuous random variable because $P(X = 1) > 0$ (for continuous random variables, the probability of taking any single value is equal to $0$).

Is $X$ a mixed random variable?

We can define two random variables:

Since , then

On the other hand, in scenario 2, the firm gets back a random amount uniformly distributed between and million €. Therefore,

Since S1 holds with probability and S2 holds with probability , we have that

, because
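The mixture formula can be sanity-checked by simulating the two scenarios directly. All numbers below are hypothetical (the probability of doing nothing, the kept amount and the uniform range are assumptions chosen for illustration, not the values of Example 5.1), and `F_mixed` and `simulate` are hypothetical names.

```python
import random

# Hypothetical parameters, chosen only for illustration:
P_NOTHING = 0.4       # probability that the firm does nothing (alpha)
KEPT = 1.0            # amount kept when nothing is done, in million EUR
LOW, HIGH = 0.5, 2.0  # range of the uniform amount returned if it invests


def F_mixed(x):
    """F_X(x) = alpha * F_d(x) + (1 - alpha) * F_c(x), where X_d = KEPT with
    probability 1 and X_c ~ Uniform(LOW, HIGH)."""
    F_d = 1.0 if x >= KEPT else 0.0
    F_c = min(max((x - LOW) / (HIGH - LOW), 0.0), 1.0)
    return P_NOTHING * F_d + (1 - P_NOTHING) * F_c


def simulate(n, seed=0):
    """Draw n outcomes of X directly from the two-scenario description."""
    rng = random.Random(seed)
    return [KEPT if rng.random() < P_NOTHING else rng.uniform(LOW, HIGH)
            for _ in range(n)]


draws = simulate(100_000)
for x in (0.75, 1.0, 1.5):
    empirical = sum(d <= x for d in draws) / len(draws)
    print(x, round(F_mixed(x), 4), round(empirical, 4))
print("jump at KEPT:", F_mixed(KEPT) - F_mixed(KEPT - 1e-12))  # about P_NOTHING
```

The CDF rises continuously on the uniform range and has a single jump of size $\alpha$ at the kept amount, which is the signature of a mixed random variable.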

Exercise: Let

Compute and .

Answer:

6 The Distribution of Functions of Random Variables

Motivation: Assume that the random variable $X$ represents the demand for a given product in a store. The profit of this store is represented by the random variable $Y$. If the probability function of $X$ is given by

what is the probability of having ?

Since $Y$ is a random variable, it should be possible to find its distribution. How can we do it?

Let $X$ be a random variable with known cumulative distribution function $F_X$.

Consider a new random variable $Y = g(X)$, where $g$ is a known function, and let $F_Y$ be the cumulative distribution function of $Y$. How can we derive $F_Y$ from $F_X$?

The derivation of $F_Y$ is based on the equality

$$F_Y(y) = P(Y \le y) = P(g(X) \le y) = P(X \in A_y),$$

where

$$A_y = \{x \in \mathbb{R} : g(x) \le y\}.$$
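The equality $F_Y(y) = P(X \in A_y)$ can be illustrated with $g(x) = x^2$, for which $A_y = [-\sqrt{y}, \sqrt{y}]$ when $y \ge 0$ and $A_y = \emptyset$ when $y < 0$. The sketch assumes, as a hypothetical choice, $X \sim \text{Uniform}(-1, 1)$, so that the exact answer is $F_Y(y) = \sqrt{y}$ on $[0, 1]$; a simulation with a fixed seed is included as a cross-check.

```python
import math
import random


def F_X(x):
    """Hypothetical choice: CDF of X ~ Uniform(-1, 1)."""
    return min(max((x + 1) / 2, 0.0), 1.0)


def F_Y(y):
    """F_Y(y) = P(X in A_y), A_y = {x : x**2 <= y} = [-sqrt(y), sqrt(y)] for y >= 0.
    X is continuous, so P(X = -sqrt(y)) = 0 and P(X in A_y) = F_X(sqrt(y)) - F_X(-sqrt(y))."""
    if y < 0:
        return 0.0  # A_y is empty
    r = math.sqrt(y)
    return F_X(r) - F_X(-r)


rng = random.Random(1)
ys = [rng.uniform(-1, 1) ** 2 for _ in range(100_000)]
for y in (0.04, 0.25, 0.81):
    # exact F_Y(y), empirical proportion of simulated Y values <= y
    print(y, F_Y(y), sum(v <= y for v in ys) / len(ys))
```

Only $F_X$ and the set $A_y$ are needed; the step where the left endpoint is dropped relies on $X$ being continuous.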

Example 6.1 Derive the cumulative distribution functions of where and .

For ,

6.1 Functions of Continuous Random Variables

Assume that in the previous example $X$ is a continuous random variable such that

then the following holds:

Example 6.2 Assume that in the previous example $X$ is a continuous random variable such that

then the following holds:

If then . When

6.2 Functions of Discrete Random Variables

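When $X$ is a discrete random variable, it is easier to find the distribution of $Y = g(X)$. In this case, we derive the probability function directly.

Let $D_X$ be the set of discontinuities of $F_X$; then $D_Y = g(D_X) = \{g(x) : x \in D_X\}$ is the set of discontinuities of $F_Y$.

The probability function of $Y$ is given by

$$f_Y(y) = P(Y = y) = \sum_{x \in D_X:\, g(x) = y} f_X(x), \quad y \in D_Y.$$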

Example 6.3 Consider the discrete random variable $X$ with probability function

| $x$ | -2 | -1 | 0 | 1 | 2 |
|---|---|---|---|---|---|
| $f_X(x)$ | 12/60 | 15/60 | 10/60 | 6/60 | 17/60 |

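Let $Y = X^2$. What is the probability function of $Y$?

First, the set of discontinuities of $F_Y$ is $D_Y = g(D_X) = \{0, 1, 4\}$, as the values of $y = x^2$ show:

| $x$ | -2 | -1 | 0 | 1 | 2 |
|---|---|---|---|---|---|
| $y = x^2$ | 4 | 1 | 0 | 1 | 4 |

Consequently, $f_Y(0) = f_X(0) = 10/60$, $f_Y(1) = f_X(-1) + f_X(1) = 21/60$ and $f_Y(4) = f_X(-2) + f_X(2) = 29/60$, that is:

| $y$ | 0 | 1 | 4 |
|---|---|---|---|
| $f_Y(y)$ | 10/60 | 21/60 | 29/60 |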
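The rule $f_Y(y) = \sum_{x:\, g(x) = y} f_X(x)$ amounts to grouping the support of $X$ by the value of $g$. The sketch below applies it to the probability function of Example 6.3 with $g(x) = x^2$; `pmf_of_function` is a hypothetical helper name.

```python
from collections import defaultdict
from fractions import Fraction


def pmf_of_function(pmf_X, g):
    """f_Y(y) = sum of f_X(x) over all support points x with g(x) = y."""
    pmf_Y = defaultdict(Fraction)  # Fraction() == 0, so missing keys start at zero
    for x, p in pmf_X.items():
        pmf_Y[g(x)] += p
    return dict(sorted(pmf_Y.items()))


# Probability function of X from Example 6.3.
pmf_X = {-2: Fraction(12, 60), -1: Fraction(15, 60), 0: Fraction(10, 60),
         1: Fraction(6, 60), 2: Fraction(17, 60)}
pmf_Y = pmf_of_function(pmf_X, lambda x: x ** 2)
for y, p in pmf_Y.items():
    print(y, p)  # 0 1/6, then 1 7/20, then 4 29/60 (reduced forms of 10/60, 21/60, 29/60)
```

`Fraction` reduces automatically, so $10/60$ is shown as $1/6$ and $21/60$ as $7/20$.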